NOTICE: The hardware components in the processor enclosures are not compatible with the components in the Dell | EMC FC4500, FC4700, and FC4700-2 DAEs. Exchanging components between these enclosures could damage the enclosures and void your warranty.

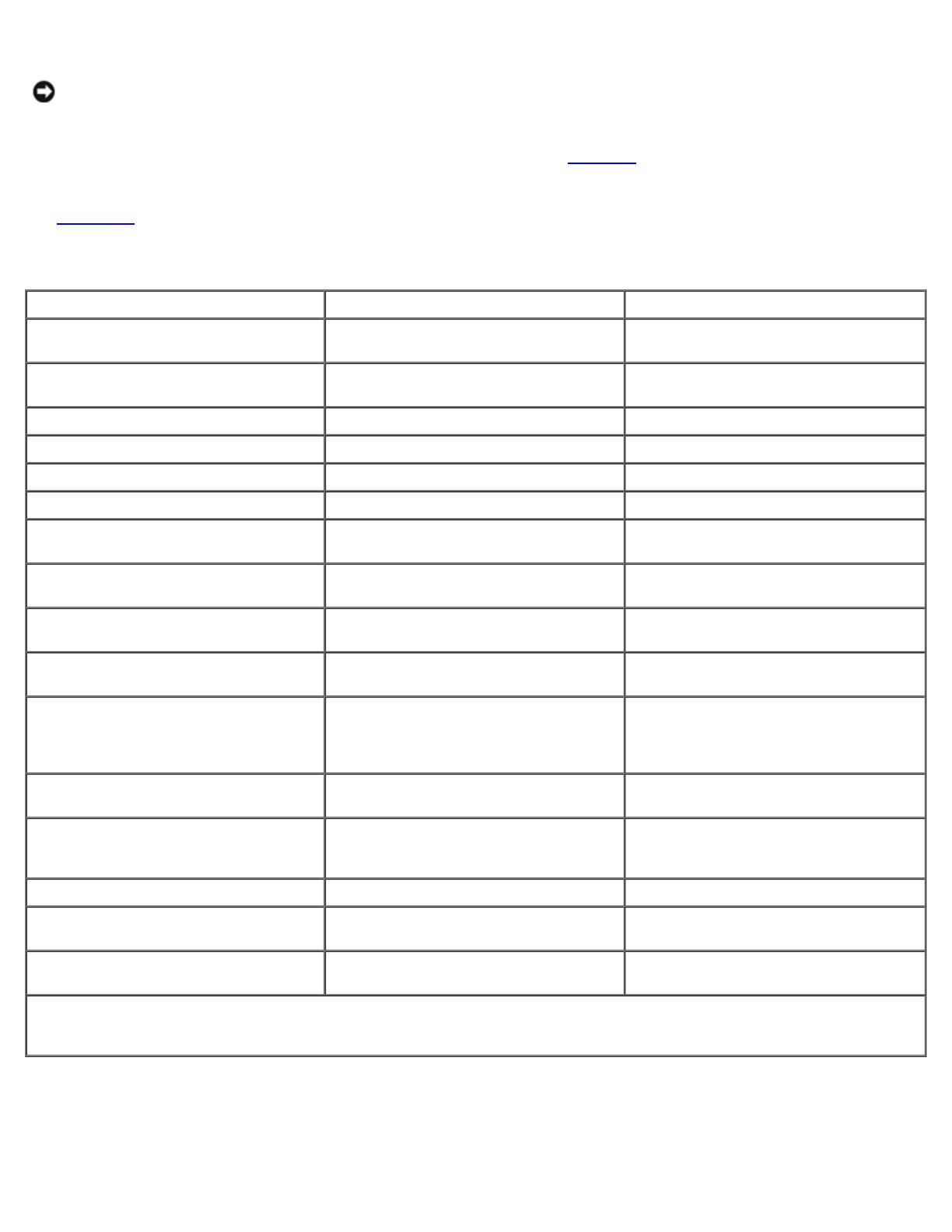

The supported processor enclosures vary in performance and configuration. Table 1-2 provides the features for each processor enclosure. See Table 1-13 for more information on the supported processor enclosures.

Table 1-2. Processor Enclosure Features

| CX200 | CX400 | CX600 |
| --- | --- | --- |
| 3-U entry-level modular storage system. | 3-U midrange modular storage system. | 4-U enterprise modular storage system. |
| Two Intel® 800-MHz Mobile Pentium® III processors. | Two Intel 800-MHz Pentium III processors. | Two Intel 2-GHz Pentium 4 processors. |
| 1-GB cache. | Up to 2-GB cache. | Up to 8-GB cache. |
| Supports up to 15 HBAs per SP. | Supports up to 15 HBAs per port. | Supports up to 32 HBAs per port. |
| Up to 200-MB/s data transfer rate. | Up to 680-MB/s data transfer rate. | Up to 1300-MB/s data transfer rate. |
| Supports up to 15 internal hard drives. | Supports up to 15 internal hard drives. | No internal hard drive support. |
| Two front-end ports in a hub configuration that connect to the hosts. | Four front-end ports that connect to the hosts. | Eight front-end ports that connect to the hosts. |
| Two back-end ports that connect to disks through the DAE2 enclosures. | Four back-end ports that connect to disks through the DAE2 enclosures. | Four back-end ports that connect to disks through the DAE2 enclosures. |
| Supports one DAE2 enclosure for additional data storage. | Supports up to three DAE2 enclosures for additional data storage. | Supports up to 16 DAE2 enclosures for additional storage. |
| Supports one cluster in a direct-attached cluster configuration. | Supports one cluster in a direct-attached cluster configuration. | Supports up to two clusters in a direct-attached cluster configuration. |
| Up to 30 drives per array with a maximum storage capacity of 4.4 TB (using fifteen 1-inch 146-GB hard drives for each DAE2 enclosure). | Up to 60 drives per array with a maximum storage capacity of 8.8 TB (using fifteen 1-inch 146-GB hard drives for each DAE2 enclosure). | Up to 240 drives per array with a maximum storage capacity of 35 TB (using fifteen 1-inch 146-GB hard drives for each DAE2 enclosure). |
| Manages the RAID arrays on the DPE and the DAE2. | Manages the RAID arrays on the DPE and the DAE2. | Manages the RAID arrays on the DAE2. |
| Core software (FLARE) and vault disks are located on the first five internal hard drives. | Core software (FLARE) and vault disks are located on the first five internal hard drives. | Core software (FLARE) and vault disks are located on the first attached DAE2 (or DAE2-OS). |
| Supports RAID 0, 1, 1/0, 3, and 5. | Supports RAID 0, 1, 1/0, 3, and 5. | Supports RAID 0, 1, 1/0, 3, and 5. |
| Supports nondisruptive upgrades for online software and firmware. | Supports nondisruptive upgrades for online software and firmware. | Supports nondisruptive upgrades for online software and firmware. |
| No support for MirrorView, SnapView, and SAN Copy. | Supports MirrorView, SnapView, and SAN Copy. | Supports MirrorView, SnapView, and SAN Copy. |

NOTE: The core software (FLARE) contains the operating system that powers the storage system. The vault disks are the first five hard drives in the DPE (CX200 and CX400) or in the first attached DAE2 (CX600); they store unprocessed write cache if the storage system power sources fail.
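The drive counts and maximum capacities listed in Table 1-2 follow from simple arithmetic: internal drive slots plus 15 drives per attached DAE2, multiplied by 146 GB per drive. The sketch below reproduces those figures as a sanity check. The class and constant names are illustrative only and are not part of any Dell | EMC tool. It assumes decimal units (1 TB = 1000 GB) and reports raw capacity, before any RAID overhead or space reserved for the vault disks.

```python
from dataclasses import dataclass

DRIVES_PER_DAE2 = 15      # drive slots per DAE2 enclosure, per Table 1-2
DRIVE_CAPACITY_GB = 146   # drive size used for the table's capacity figures


@dataclass(frozen=True)
class ProcessorEnclosure:  # hypothetical helper type, for illustration only
    name: str
    internal_drives: int   # drives housed in the processor enclosure itself
    max_dae2: int          # maximum number of attached DAE2 enclosures

    def max_drives(self) -> int:
        # Internal slots plus 15 drives for every attached DAE2.
        return self.internal_drives + self.max_dae2 * DRIVES_PER_DAE2

    def max_capacity_tb(self) -> float:
        # Assumes decimal terabytes (1 TB = 1000 GB); raw capacity only.
        return self.max_drives() * DRIVE_CAPACITY_GB / 1000


MODELS = [
    ProcessorEnclosure("CX200", internal_drives=15, max_dae2=1),
    ProcessorEnclosure("CX400", internal_drives=15, max_dae2=3),
    ProcessorEnclosure("CX600", internal_drives=0, max_dae2=16),
]

for model in MODELS:
    print(f"{model.name}: {model.max_drives()} drives, "
          f"{model.max_capacity_tb():.2f} TB raw")
```

Under these assumptions the results are 4.38 TB, 8.76 TB, and 35.04 TB, which round to the 4.4 TB, 8.8 TB, and 35 TB shown in the table.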

Disk Array Enclosure

The NAS cluster solution uses the DAE2, a 2-Gb/s, 3-U DAE that can be configured with up to 15 dual-ported, hot-swappable Fibre Channel hard drives per enclosure. The DAE2 provides a scalable storage solution, allowing you to add